Introduction to deep learning in Python: learn deep learning in Python

Python is a general-purpose language that can be used in many different ways. One of its main uses is in data science and in building deep learning algorithms.

Data science has dozens of important applications in today's world, from marketing to pharmaceuticals and search engines. Python has excellent libraries for deep learning and is one of the main tools of data science.

In this article, we teach the basics of deep learning in Python in very simple language. To get the most out of it, you should be familiar with Python and with basic deep learning concepts and techniques.

What is deep learning?

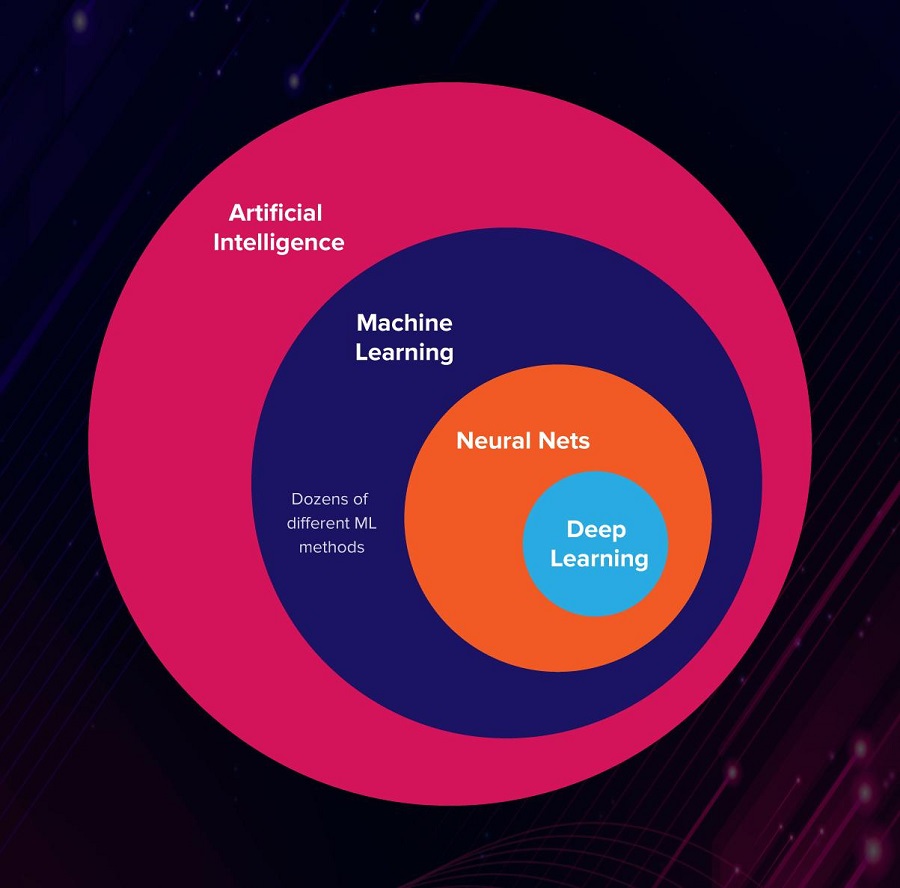

Many parts of our daily lives are governed by artificial intelligence and data science, often without our even knowing it. One of the most important topics in AI is deep learning. To define deep learning, we must first define machine learning.

Machine learning is the use of statistics to find recurring patterns in very large amounts of data. This data may be numbers, words, pictures, clicks, or anything else that can be stored digitally. Today's large, well-known systems, such as YouTube and Google, owe much of their success to machine learning.

Deep learning is a subset of machine learning that stacks many layers of simple processing units, loosely modeled on brain cells (neurons). The resulting structure is called an artificial neural network, and it learns from data in a way loosely inspired by the human brain.
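To make the idea of layers concrete, here is a minimal NumPy sketch of what a single artificial neuron and a small stack of layers compute. It is not part of the original tutorial, and the function names `relu`, `sigmoid`, and `dense` are our own. In a real library, training would adjust the weights automatically. Here they are fixed by hand or drawn at random.

```python
import numpy as np

def relu(z):
    # ReLU activation: passes positive values through, sets negatives to 0
    return np.maximum(0.0, z)

def sigmoid(z):
    # Sigmoid activation: squashes any real number into the range (0, 1)
    return 1.0 / (1.0 + np.exp(-z))

def dense(x, weights, bias, activation):
    # One fully connected layer: each output neuron takes a weighted sum of
    # all inputs, adds its bias, and applies the activation function.
    return activation(x @ weights + bias)

# A single neuron with two inputs (weights chosen by hand for illustration).
x = np.array([1.0, 2.0])
w = np.array([[0.5], [-0.25]])   # shape (2 inputs, 1 neuron)
b = np.array([0.1])
print(dense(x, w, b, relu))      # [0.1]

# Stacking layers makes the network "deep": the output of one layer is the
# input of the next. The weights here are random and untrained.
rng = np.random.default_rng(0)
h = dense(x, rng.normal(size=(2, 3)), np.zeros(3), relu)       # hidden layer, 3 neurons
out = dense(h, rng.normal(size=(3, 1)), np.zeros(1), sigmoid)  # output neuron
print(out.shape)                 # (1,)
```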

Deep learning was one of the main drivers of Google's major transformation in 2015. Other applications of deep learning include automatically detecting typographical errors in online dictionaries.

What are the uses of deep learning?

You have probably heard of deep learning and its applications by now. Some of the most important applications of deep learning are:

- Automatic speech recognition

- Image recognition

- Natural language processing

- Drug discovery and toxicology

- Customer relationship management

- Recommendation systems

- Bioinformatics

- Motion detection

How do you work with deep learning in Python?

Deep learning in Python is done with specialized libraries. To work with deep learning in Python, you first need Python installed on your system. Current releases of the libraries below require Python 3. In addition, you need to install the following libraries:

- SciPy and NumPy

- Matplotlib

- Theano

- Keras

- TensorFlow

It is best to use the Anaconda distribution to access all of these libraries (packages). Anaconda includes a large number of Python libraries used in various areas of data science. Alternatively, you can install the packages one by one and then import them.
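Before going further, you may want to check which of these packages your environment already has. The sketch below is only an illustration. It assumes the usual import names (`numpy`, `scipy`, `matplotlib`, `tensorflow`, `keras`) and uses the standard-library `importlib.util.find_spec`, which locates a module without importing it. Theano is left out because it is no longer maintained. The helper `missing_packages` is our own name.

```python
import importlib.util

# Import names of the packages listed above (Theano omitted; adjust as needed).
PACKAGES = ["numpy", "scipy", "matplotlib", "tensorflow", "keras"]

def missing_packages(names):
    """Return the package names that Python cannot find in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]

missing = missing_packages(PACKAGES)
if missing:
    print("Not installed:", ", ".join(missing))
else:
    print("All packages found.")
```

If anything is reported missing and you are not using Anaconda, you can install it from a terminal with pip, for example `pip install scipy matplotlib`.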

Use the Keras library

One of the easiest ways to get started with deep learning in Python is Keras, an open-source library for developing deep learning models that is very easy to use. With Keras, you can build and train your own artificial neural network in just a few lines of code.

Keras is commonly used alongside other libraries such as NumPy, because deep learning always involves working with data. To import the libraries we need, we write:

```python
# first neural network with keras tutorial
from numpy import loadtxt
from keras.models import Sequential
from keras.layers import Dense
...
```

In this example, we load a dataset of medical measurements recorded for a group of patients, together with whether each patient had diabetes. We then use deep learning to train an artificial neural network to diagnose the disease.

You can download this dataset from https://raw.githubusercontent.com/jbrownlee/Datasets/master/pima-indians-diabetes.data.csv.

Save the file as pima-indians-diabetes.csv. Each row of the file is a set of comma-separated numbers, for example:

0,137,40,35,168,43.1,2.288,33,1

The first eight values describe the patient's characteristics. The last value is 1 if the patient has diabetes and 0 otherwise. To design a deep learning model, we treat the first eight values as the input X and the last value as the output y. The relationship between them is:

y = f(X)

where f is the unknown function that the neural network learns to approximate.

Now we load the data set using the following code:

```python
# load the dataset
dataset = loadtxt('pima-indians-diabetes.csv', delimiter=',')
# split into input (X) and output (y) variables
X = dataset[:,0:8]
y = dataset[:,8]
```
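To see what the slicing produces, the sketch below runs the same steps on a single nine-value row in the file's format. `io.StringIO` stands in for the CSV file on disk, so nothing needs to be downloaded. One detail matters for very small inputs. Without `ndmin=2`, `loadtxt` returns a one-dimensional array for a one-row file, and `dataset[:,0:8]` would then fail.

```python
from io import StringIO
from numpy import loadtxt

# One row in the dataset's format; StringIO stands in for the CSV file.
SAMPLE_CSV = "0,137,40,35,168,43.1,2.288,33,1\n"

# ndmin=2 keeps the result two-dimensional even for a single row.
dataset = loadtxt(StringIO(SAMPLE_CSV), delimiter=",", ndmin=2)
X = dataset[:, 0:8]   # first eight columns: the patient's measurements
y = dataset[:, 8]     # ninth column: 1 = diabetes, 0 = no diabetes

print(X.shape, y.shape)  # (1, 8) (1,)
```

With the full file, the same code gives one row of X and one entry of y per patient.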

Define the Keras model

Deep learning means learning with multiple layers. In Keras, a model is defined as a sequence of layers. We create a Sequential model and add layers to it one at a time until we have the network architecture we want. For the data we loaded in the previous step, the input has 8 variables. We pass this number to the first layer with the input_dim argument.

How many layers we need is not a question with a simple answer. Networks are usually designed by trial and error, and there is no fixed rule for it. In practice, the designer's experience plays a key role.

The goal is a network large enough to capture the structure of the problem. In this example we use a fully connected network with three layers, built with the Dense class. The first argument of Dense specifies the number of neurons (units) in the layer, and the activation argument specifies the layer's activation function. The two hidden layers use ReLU ('relu'). The output layer uses a sigmoid, which produces a value between 0 and 1 that can be read as the probability of diabetes.

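```python
# define the keras model
model = Sequential()
# hidden layer 1: 12 neurons, receiving the 8 input variables
model.add(Dense(12, input_dim=8, activation='relu'))
# hidden layer 2: 8 neurons
model.add(Dense(8, activation='relu'))
# output layer: 1 neuron with a sigmoid, giving a value between 0 and 1
model.add(Dense(1, activation='sigmoid'))
```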
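A quick piece of arithmetic shows how much this small network has to learn. Each Dense unit has one weight per input plus one bias, so a layer with n inputs and m units has n·m + m trainable parameters. The sketch below applies that formula to the layer sizes above. `dense_params` is a hypothetical helper, not a Keras function.

```python
def dense_params(n_inputs, n_units):
    # Each unit has one weight per input plus one bias term.
    return n_inputs * n_units + n_units

# Layer sizes from the model above: 8 inputs -> 12 -> 8 -> 1.
sizes = [8, 12, 8, 1]
per_layer = [dense_params(a, b) for a, b in zip(sizes, sizes[1:])]
print(per_layer, sum(per_layer))  # [108, 104, 9] 221
```

Calling `model.summary()` on the Keras model should report the same total of 221 trainable parameters.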

Compile the Keras model

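Now that the model is defined, we can compile it. Compiling relies on efficient numerical libraries in the backend, such as Theano or TensorFlow. The backend automatically chooses the best way to represent the network for training and making predictions, and the best way to run it on your hardware. Some networks make heavier use of the graphics card (GPU), and others make heavier use of the CPU. When compiling, we specify three things. The loss function measures how wrong the network's predictions are. The optimizer searches for better weights. The metrics are reported during training. Here we use binary cross-entropy as the loss, which suits a two-class problem like this one, the adam optimizer, and accuracy as the metric.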

```python
# compile the keras model
# binary cross-entropy loss for a two-class problem, adam optimizer, report accuracy
model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
```
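Binary cross-entropy may sound abstract. For a true label y (0 or 1) and a predicted probability p, it is −[y·log(p) + (1 − y)·log(1 − p)], averaged over all samples. The sketch below computes this standard formula in NumPy. The labels and predictions are made-up numbers, and the function is our own illustration. Small numerical details, such as the clipping constant, may differ from Keras's internal implementation.

```python
import numpy as np

def binary_crossentropy(y_true, y_pred, eps=1e-7):
    # Clip predictions away from exactly 0 and 1 so the logarithm stays finite.
    p = np.clip(y_pred, eps, 1.0 - eps)
    # Average of -[y*log(p) + (1 - y)*log(1 - p)] over all samples.
    return float(np.mean(-(y_true * np.log(p) + (1.0 - y_true) * np.log(1.0 - p))))

y_true = np.array([1.0, 0.0])   # made-up labels: one diabetic, one non-diabetic
y_pred = np.array([0.9, 0.2])   # hypothetical sigmoid outputs from the network
print(round(binary_crossentropy(y_true, y_pred), 4))  # 0.1643
```

Confident correct predictions (p close to the label) give a loss near zero. Confident wrong predictions are penalized heavily, which is what pushes the optimizer toward better weights.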

Fit the Keras model

Now that the model has been defined and compiled, it is time to fit it to the actual data. This is where the artificial neural network begins to learn from examples, much like a child, so that it can respond appropriately to new cases in the future.

Training runs for a number of epochs, and each epoch is divided into batches. An epoch is one pass through all the rows of the training dataset. A batch is one or more samples that the network processes before its weights are updated. Both values are set in the call to fit (here 150 epochs and a batch size of 10). They are usually chosen by experimenting with the dataset at hand.

```python
# fit the keras model on the dataset
# 150 passes over the data, updating the weights after every batch of 10 rows
model.fit(X, y, epochs=150, batch_size=10)
```
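To get a feel for what these two settings mean, the sketch below counts the batches and weight updates for this run. It assumes the 768 rows shown in the training output and one weight update per batch, with the last, smaller batch still used. This is plain arithmetic, not a Keras call.

```python
import math

n_rows = 768        # rows in the Pima Indians diabetes dataset
batch_size = 10     # batch_size passed to model.fit above
epochs = 150        # epochs passed to model.fit above

batches_per_epoch = math.ceil(n_rows / batch_size)   # the last batch is smaller
last_batch = n_rows - (batches_per_epoch - 1) * batch_size
weight_updates = batches_per_epoch * epochs

print(batches_per_epoch, last_batch, weight_updates)  # 77 8 11550
```

A smaller batch size means more, noisier updates per epoch. A larger one means fewer, smoother updates.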

Evaluate the Keras model

We have now trained the neural network on the entire dataset, and we can evaluate its performance on that same data. This evaluation tells us how well we have modeled the dataset. It cannot tell us how well the network will perform on new data, because the network has already seen every example it is being tested on.

```python
# evaluate the keras model
# evaluate() returns the loss followed by the accuracy; the loss is discarded
_, accuracy = model.evaluate(X, y)
print('Accuracy: %.2f' % (accuracy*100))
```
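The accuracy reported here is the fraction of patients whose predicted class matches the true label. Each sigmoid output is turned into a class (1 if above 0.5, otherwise 0). The NumPy sketch below illustrates that idea with hypothetical outputs. `binary_accuracy` is our own helper and is not the Keras implementation.

```python
import numpy as np

def binary_accuracy(y_true, y_prob, threshold=0.5):
    # Turn each sigmoid output into a class label (1 if above the threshold),
    # then return the fraction of labels that match the true ones.
    y_pred = (y_prob > threshold).astype(float)
    return float(np.mean(y_pred == y_true))

y_true = np.array([1.0, 0.0, 1.0, 0.0])
y_prob = np.array([0.8, 0.3, 0.4, 0.1])   # hypothetical network outputs
accuracy = binary_accuracy(y_true, y_prob)
print('Accuracy: %.2f' % (accuracy * 100))  # Accuracy: 75.00
```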

Putting all the steps together, the complete program is as follows:

```python
from numpy import loadtxt
from keras.models import Sequential
from keras.layers import Dense
# load the dataset
dataset = loadtxt('pima-indians-diabetes.csv', delimiter=',')
# split into input (X) and output (y) variables
X = dataset[:,0:8]
y = dataset[:,8]
# define the keras model
model = Sequential()
model.add(Dense(12, input_dim=8, activation='relu'))
model.add(Dense(8, activation='relu'))
model.add(Dense(1, activation='sigmoid'))
# compile the keras model
model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
# fit the keras model on the dataset
model.fit(X, y, epochs=150, batch_size=10)
# evaluate the keras model
_, accuracy = model.evaluate(X, y)
print('Accuracy: %.2f' % (accuracy*100))
```

Copy this program into a file named keras_first_network.py on your system. With the pima-indians-diabetes.csv file in the same folder, you can run it with the following command:

```shell
python keras_first_network.py
```

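On a typical system, the program takes about 10 seconds to run. The last lines of the output look like the following. Because the network's weights are initialized randomly, your exact numbers will differ.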

```
768/768 [==============================] - 0s 63us/step - loss: 0.4817 - acc: 0.7708
Epoch 147/150
768/768 [==============================] - 0s 63us/step - loss: 0.4764 - acc: 0.7747
Epoch 148/150
768/768 [==============================] - 0s 63us/step - loss: 0.4737 - acc: 0.7682
Epoch 149/150
768/768 [==============================] - 0s 64us/step - loss: 0.4730 - acc: 0.7747
Epoch 150/150
768/768 [==============================] - 0s 63us/step - loss: 0.4754 - acc: 0.7799
768/768 [==============================] - 0s 38us/step
Accuracy: 76.56
```
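The accuracy above is measured on the same rows the network was trained on. A common way to get a fairer estimate is to hold out part of the data for testing. This step is not part of the original tutorial. The sketch below does it with NumPy only. `split_train_test` is a hypothetical helper (scikit-learn's `train_test_split` does the same job). The zero-filled arrays are placeholders with the dataset's shape, not real data.

```python
import numpy as np

def split_train_test(X, y, test_fraction=0.2, seed=0):
    """Shuffle the rows and split them into training and held-out test sets."""
    rng = np.random.default_rng(seed)
    indices = rng.permutation(len(X))
    n_test = int(round(len(X) * test_fraction))
    test_idx, train_idx = indices[:n_test], indices[n_test:]
    return X[train_idx], X[test_idx], y[train_idx], y[test_idx]

# Placeholder arrays with the same shape as the Pima data (768 rows, 8 features).
X = np.zeros((768, 8))
y = np.zeros(768)
X_train, X_test, y_train, y_test = split_train_test(X, y)
print(X_train.shape, X_test.shape)  # (614, 8) (154, 8)
```

With the real data, you would train with `model.fit(X_train, y_train, epochs=150, batch_size=10)` and report `model.evaluate(X_test, y_test)`. That score reflects rows the network never saw during training.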

Conclusion

Python is a practical tool in various fields of computer science, software engineering and data science. It is currently one of the top ten programming languages in the world in terms of popularity, usage and employment.

Many of today's advances in the digital world have been built with the Python programming language.

The language allows data scientists to train artificial neural networks with deep learning algorithms and use them in a variety of applications, such as search engine optimization or finisher detection. Python also has numerous libraries for working with data, big data, and machine learning.

Using these tools effectively requires familiarity with the basics of data science and Python. In this article, we gave a practical example of how to implement a deep learning algorithm in Python and train an artificial neural network to diagnose diabetes.