The Most Important Car Never Built – Computing Machine

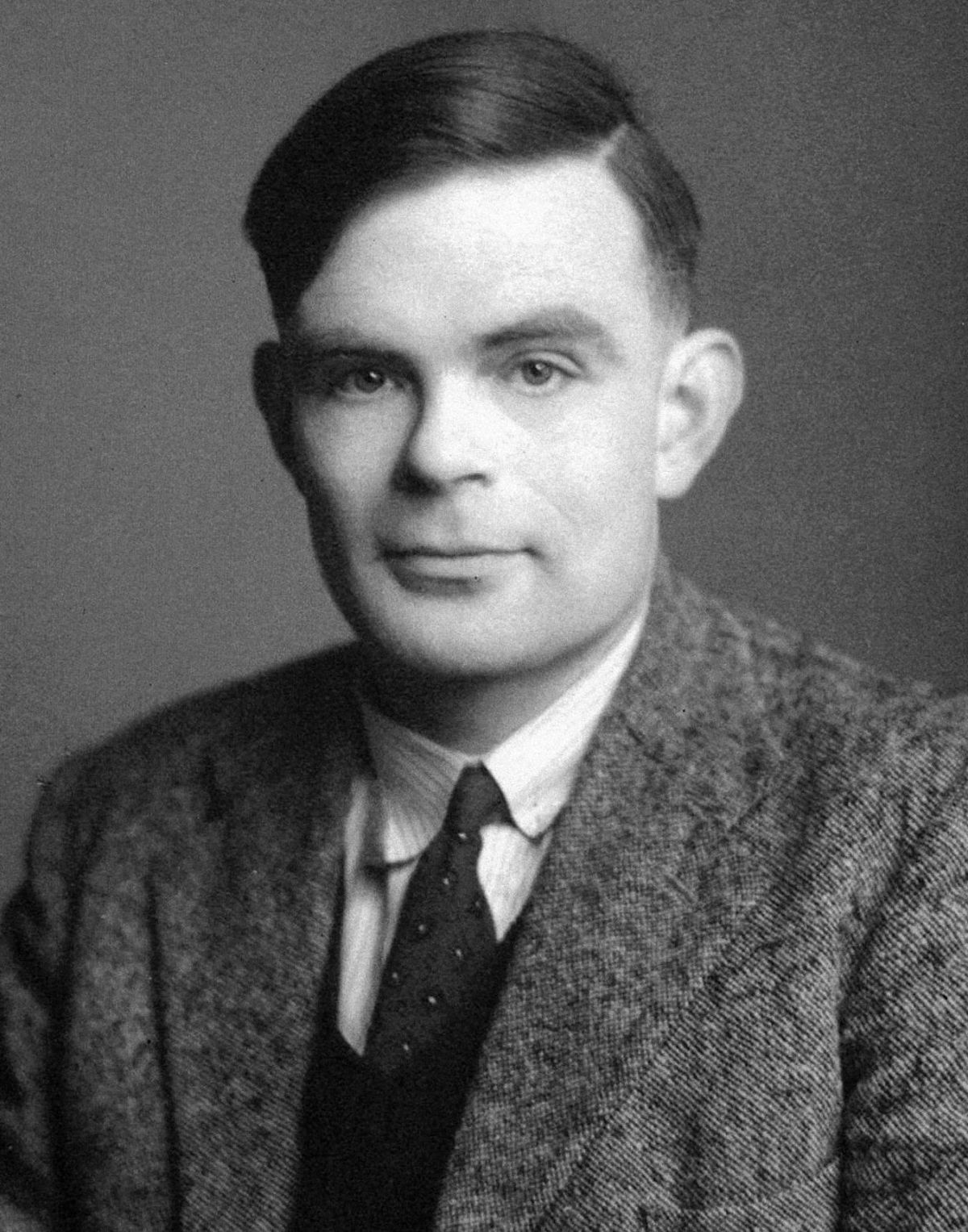

The Famous Mathematician Alan Turing Had The Idea Of Building A Computing Machine In Mind, Which For Some Reason, Was Never Built.

Computing is a familiar concept that most of us understand intuitively. Consider the function (f(x) = x + 3), where when the value of x is equal to three (f(3) = 3 + 3), the solution of the function becomes six.

The described function is computable. But some parts are not that simple, and it is not easy to determine whether they can be computed, which means they may never give us the final answer.

In 1928, German mathematicians David Hilbert and Wilhelm Ackermann proposed a problem called the Entscheidung problem. Over time, their situation led to the formal definition of computability, allowing mathematicians to answer many new issues and laying the foundation for theoretical computer science.

According to Quanta Magazine, the definition mentioned above was the result of the thought of a 23-year-old student named Alan Turing, who wrote an entire article in 1936 that not only formalized the concept of calculations but also proved a fundamental question in mathematics and established the intellectual foundation of the invention of the electronic computer.

Turing wanted to give a concrete answer to the computational problem in the form of an abstract machine. This machine was later named the Turing machine by his doctoral advisor Alonzo Church.

A Turing machine is an abstract concept because it does not (and cannot) exist as a concrete device. It is a conceptual model of computation: if a machine can compute a function, then that function is computable or computable.

In the following, the operation of the Turing machine is explained.

A Turing machine can read and manipulate symbols on an infinitely long tape according to a table of rules. The video consists of houses, each of which can store a character, and the machine reads and overwrites the contents of each home with the tape head.

Each rule in the table determines what the machine should do based on its current state and the symbol it reads. The device can enter a final state (accept form or reject form), after which it stops accepting or rejecting the input, or it can fall into an infinite loop and continue reading the tape forever.

The best way to understand a Turing machine is to consider a simple example. Imagine a machine designed to tell us whether a particular input is zero.

We use the input number 0001 and the (#) symbol (#0001#) to demonstrate this, so #0001# is the desired field on our tape.

The machine starts in the initial state, which we call q0. The engine starts from the leftmost houses on the bar and reaches the # symbol (with the numbers inside). The rules say that when you are in state q0, if there is a # symbol, ignore it, move one cell to the right, and change the machine’s state to q1. After this step, the device is in the q1 condition, and its head reads the second symbol, i.e., 0.

Now we look for a rule that applies to this condition and find one that says: stay in q1 and move the head to the correct cell. This causes us to stay in the same position (in q1 mode, reading 0).

So, we keep moving to the right until the head finally reads a different number, 1. When we recheck the table, we find a new rule: if you encounter a 1, go to state q2, which is the reject state. The machine stops and answers “no” to the initial “Is 0001 zero” question.

When we examine the table, we find a rule that says that this means that the device enters state q3, the acceptance state. The engine now answers “yes” to whether 0000 is zero. But if the input is #0000#, the machine will encounter # after all those zeros.

Alan Turing helped define computation, algorithms, and what became known as the Turing machine.

Alan Turing helped define computation, algorithms, and what became known as the Turing machine.

With his abstract machine, Turing created a model of computation to answer the decision problem, which asks the question: Given a set of mathematical principles, is there a mechanical process (a set of instructions that we now call an algorithm) that always determine the truth of a particular proposition?

Suppose we want to find an algorithm to tell us whether a particular chess position is possible. Can we follow a limited step-by-step sequence of methods to reach the desired position? Here, the rules of chess govern the allowed moves.

Although some situations may take more than our lifetime to analyze (the algorithm may generate all possible problems and compare each of them with the input), such an algorithm exists in the game of chess. Consequently, we say that chess is “decidable.”

However, in 1936, Church and Turing independently proved (using different methods) that there is no general solution to all possible instances of the decision problem. No algorithm can determine whether or not a particular pattern will emerge from the initial way. For example, some games, such as the Game of Life, designed by John Horton Conway, are not decidable.

Turing showed that a function is computable if an algorithm can perform the desired task. Meanwhile, he showed that an algorithm is a process that a Turing machine can define. Therefore, a computable function is a function for which there is a Turing machine. This definition may seem like a convoluted way to define computability, but it is our best definition.

“There’s no other way to define it,” says Michael Sipser, a theoretical computer scientist at the Massachusetts Institute of Technology. I think it is generally accepted that the Church-Turing thesis states that the informal concept of an algorithm corresponds to what any reasonable computational model can do. »

Other mathematicians have come up with various models of computation that appear quite different on the surface but are equivalent:

They can perform any calculation that Turing machines can achieve and vice versa.

Just a few years after Kurt Gödel proved that mathematics is imperfect, Church and Turing showed that some problems in mathematics are undecidable and that no algorithm, however sophisticated, can tell us whether the answer is yes or no.

Both cases were considered fatal blows for Hilbert, who hoped that mathematics would have clear and flawless answers. If there were a general solution to the decision problem, it would mean that entire mathematical issues could be reduced to simple mechanical calculations.

Apart from answering these fundamental questions, the Turing machine also directly led to the construction of modern computers through a version called the “universal Turing machine.”

A universal Turing machine is a particular type of Turing machine that can simulate any other Turing machine based on any input. This version of the Turing machine can read the descriptions of other Turing machines (their rules and input tapes), simulate their behavior on its input tape, and produce the same output as the unreal engine has, Just like today’s computers can read and execute any program.

In 1945, John von Neumann proposed a type of computer architecture called the von Neumann architecture, which implemented the concept of a universal Turing machine in a real machine.

When Sanjeev Arora, a theoretical computer scientist at Princeton University, teaches this concept, he emphasizes a broader philosophical picture.

“There are two meanings of ‘global,'” he explains. One of the universal concepts is that it can implement any other Turing machine. “But the larger meaning of ‘universal’ is that it can perform any computation you can think of.”

In classical physics, any physical process can be modeled or simulated using algorithms, which can be affected by a Turing machine.

Another unique and helpful type of Turing machine is the “probabilistic Turing machine.” Unlike the standard Turing machine with a specific reaction to each input, the probabilistic Turing machine can have several reactions based on probabilities.

This means the machine can produce different results for the same input at other times. Interestingly, this probabilistic strategy can be more efficient than a completely deterministic approach for some problems.

The idea of probabilistic Turing machines has become helpful in fields such as cryptography, optimization, and machine learning. These abstract machines are perhaps the best evidence that asking fundamental questions can be one of the most valuable things a scientist can do.

Alan Turing helped define computation, algorithms, and what became known as the Turing machine.

Alan Turing helped define computation, algorithms, and what became known as the Turing machine.