What Is GPT-3 and What Are Its Uses?

OpenAI recently released GPT-3, the largest natural language model to date. You may be wondering: what is GPT-3? Its design is similar to that of its predecessors. It differs primarily in scale: it has ten times as many parameters as the previous largest model and was trained on a much larger dataset. Differences of this magnitude are what set GPT-3 apart.

This scale allows it to achieve qualitative improvements over its predecessors. Unlike earlier versions, the trained GPT-3 model can perform many tasks without being specifically trained for them. This has drawn much attention in both the technology community and the news media. Several studies address its many uses and its key limitations. Although GPT-3 represents significant progress, it has limitations, which are discussed later.

Where did the GPT-3 story begin?

On May 28, OpenAI published a paper entitled “Language Models Are Few-Shot Learners,” which introduced GPT-3 as the largest language model ever built. This 73-page paper shows how GPT-3 follows recent state-of-the-art trends in language modeling. GPT-3 achieves promising and competitive results on natural language processing benchmarks.

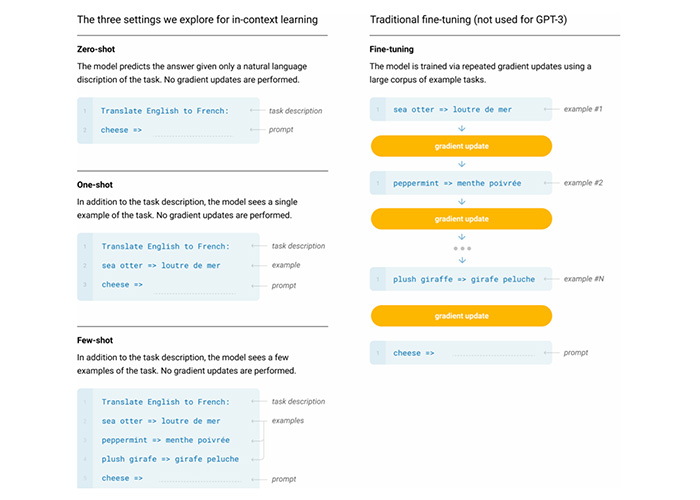

GPT-3 shows the performance gains that come from using a larger model, and it continues the large increases in model and data size that characterize recent developments in NLP. The main message of the paper, however, is less about the model's performance on benchmarks than about the discovery that, because of its scale, GPT-3 can perform NLP tasks it has never encountered before, solving them after seeing only one or a few examples. This contrasts with common practice today, in which a model must be trained on large amounts of data for each new task.

This image shows the GPT-3 approach (left) and the traditional fine-tuning approach (right). In fine-tuning, the model's internal representations are adapted to new data through gradient updates.
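The contrast can be made concrete with a small sketch of how a few-shot prompt is assembled. The task is specified entirely in the input text, with no gradient updates. The function name `build_few_shot_prompt`, the `=>` separator, and the translation examples are illustrative assumptions, not part of any OpenAI interface.

```python
def build_few_shot_prompt(task_description, examples, query):
    """Assemble a plain-text prompt: a task description, a few solved
    examples, and finally the unsolved query for the model to complete."""
    lines = [task_description, ""]
    for source, target in examples:
        # Each solved example shows the model the expected input/output pattern.
        lines.append(f"{source} => {target}")
    # The query is left open so that the model's continuation is the answer.
    lines.append(f"{query} =>")
    return "\n".join(lines)


# Hypothetical translation task with two demonstrations (the "few shots").
examples = [("sea otter", "loutre de mer"), ("cheese", "fromage")]
prompt = build_few_shot_prompt("Translate English to French:", examples, "peppermint")
print(prompt)
```

With zero examples the same function produces a zero-shot prompt, and with one example a one-shot prompt. Only the input text changes, never the model weights.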

Last year, OpenAI developed GPT-2, the second version of GPT, which could produce long, coherent texts that were difficult to distinguish from human writing. OpenAI states that it used the GPT-2 model and architecture for GPT-3. The difference is that the network and the data it is trained on are much larger than those of its predecessors. GPT-3 has 175 billion parameters, compared with 1.5 billion for GPT-2, and while GPT-2 was trained on about 40 gigabytes of text, GPT-3 was trained on about 570 gigabytes. However, increasing the scale is not in itself an innovation. GPT-3 is important because of its few-shot learning abilities, shown in the following examples of different natural language tasks.
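As a rough check on these figures, the following sketch works out the ratio of the two parameter counts. The memory estimate assumes 2 bytes per parameter (16-bit weights), which is an assumption made here for illustration and is not stated in the article.

```python
# Parameter counts quoted in the text.
gpt2_params = 1.5e9   # GPT-2: 1.5 billion
gpt3_params = 175e9   # GPT-3: 175 billion

# How many times larger GPT-3 is than GPT-2.
ratio = gpt3_params / gpt2_params
print(f"GPT-3 has about {ratio:.0f} times as many parameters as GPT-2")

# Assumption: each parameter is stored as a 16-bit number (2 bytes).
bytes_per_param = 2
weights_gb = gpt3_params * bytes_per_param / 1e9
print(f"Storing the weights alone would take about {weights_gb:.0f} GB")
```

Under that storage assumption, the weights alone are far larger than the memory of a single consumer GPU, which is one reason a model of this size is offered through a hosted interface.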

Examples of GPT-3 answering questions

Following the paper's release, OpenAI announced on June 11 that GPT-3 would be available to third-party developers through the OpenAI API, the company's first commercial product, which is in beta testing. Access to GPT-3 is by invitation only, and pricing has not yet been set. After the API launch, striking demonstrations of GPT-3 and its potential (including writing short articles and blog posts and producing creative fiction) sparked much debate within the AI community and beyond. One of the best examples of this potential is generating JavaScript code from a simple description in English.

Using GPT-3, I built a layout generator that produces JSX code for you when you describe the layout you want.

Here is a GPT-3 plugin that takes a URL and a description and creates a mock-up website similar to the original.

After hours of thinking about how this works, I tested a great GPT-3 demo. I was amazed by the coherence and subtlety of GPT-3's output. Let's try some basic arithmetic with it.

After receiving academic access, I thought about applications of GPT-3 to language understanding. With this in mind, I came up with a new demo about the uses of objects: what can be done with a given object?

GPT-3 Feedback

The media, experts in the field, and the wider technology community have differing views on the capabilities of GPT-3 and its deployment at a larger scale. Comments range from optimism about greater human productivity to fear of job losses, along with careful consideration of the capabilities and limitations of this technology.

What is the media feedback on GPT-3?

Media coverage of this issue has increased since the demo versions were released:

- MIT Technology Review covered GPT-3 and pointed to various demos showing how it could create human-like text, from generating React code to composing poetry. “This technology can do human-like writing, but it cannot bring us closer to real intelligence,” the publication said of GPT-3.

- The Verge focused on the advertising potential of GPT-3.

- Following discussions on this issue, news outlets such as Forbes and VentureBeat examined issues such as model bias and hype.

- In addition to pointing out its drawbacks, Wired noted that GPT-3 could enable a newer and more dangerous form of deepfake, one for which there is no unmodified media to compare tampered samples against. Synthetic text can be published easily in large volumes and is not easily recognizable.

- The New York Times also published an article with the headline “The new generation of artificial intelligence is very interesting and a little scary.” It raised the concern that GPT-3 will replace writers.

- Finally, John Naughton, a professor of the public understanding of technology at the Open University and a columnist for The Guardian, sees GPT-3 as merely an incremental advance over its predecessors, not a new and important discovery. Naughton warns that if such improvements depend on ever more data, their side costs will be huge in the future.

What is the feedback of artificial intelligence experts about GPT-3?

In contrast to the media coverage, the feedback from experts in machine learning and natural language processing was driven more by curiosity. It focused on how GPT-3 can be used and on how capable it is of fully understanding human language.

- Anima Anandkumar, director of AI research at NVIDIA and professor of computing and mathematical sciences at Caltech, criticized OpenAI for not paying enough attention to bias. GPT-2 had similar problems, because uncurated data sources such as Reddit were used to train these models, and that data influences the text they write.

- Facebook's head of artificial intelligence, Jerome Pesenti, had similar views: GPT-3 is creative and interesting, but it can also be harmful. We may get offensive results when we ask GPT-3 to write tweets from words such as Jews, blacks, women, and the Holocaust. We need more progress on responsible AI before making such models available to the public.

- Machine learning researcher Delip Rao responded with a post arguing that the hype created online around emerging technologies can be misleading. GPT-3 and later versions will bring few-shot learning from the research stage to the production stage. But every technological leap comes with a flood of conversation, and debates on social media can distort our thinking about the true capabilities of these technologies.

- Julian Togelius, a professor of artificial intelligence at NYU, also published a blog post on the subject entitled “A Very Short History of Some Times We Solved AI.” In that post, he places this technological leap in the context of AI history and gives reasons to temper the excitement. “Algorithms for search, optimization, and learning once raised concerns about how humanity was about to be overtaken by machines. Today these algorithms power our productivity software and products, and games and applications for phones and cars are no exception. Now that this technology works reliably, it is no longer called artificial intelligence; rather, it has become a little boring.”

What is the feedback of the technology industry about GPT-3?

Commentators from the technology industry took different approaches, and a number of them explained concepts of programming with artificial intelligence.

- Max Woolf, a data scientist at BuzzFeed, stressed the importance of tempering our expectations of GPT-3, because the results reported as impressive are usually selected from the best examples. Although the text produced by GPT-3 is better than that of other language models, it is very slow, very large, and requires a great deal of training, so it may not be possible to fine-tune it on specific data.

- Kevin Lacker, a Google engineer and founder of the startup Parse, has shown that GPT-3 can give accurate answers to many commonly asked questions about facts of the world, which it can readily draw from its training data. A blogger named Gwern Branwen has also tested GPT-3 on many prompts and topics.

- “Simple coding jobs will become hard to come by,” said Brett Goldstein, entrepreneur and former Google product manager, in response to how GPT-3 can write code from human-given specifications. The same may be true of design. Many companies will want to use GPT-3 rather than hire expensive machine learning engineers to train their own less powerful models. “Data scientists, customer support agents, legal assistants, and many other occupations are at great risk.”

- In response to the various demonstrations of GPT-3's capabilities, Reddit user rues Racine started a discussion about career paths in the post-GPT-3 world. The post indicates that some people believe GPT-3 will take their jobs. In reply, some people recommend early retirement, while others recommend learning and developing new skills to keep pace with advances in technology. Some people see GPT-3 as a step towards artificial general intelligence. Another group believes that the capabilities of GPT-3 have been overestimated and that many of the predictions will not come true.

- Jonathan Lee, a user experience design researcher, also spoke about people's job concerns in his post “Let's talk about GPT-3 AI that will shake designers” and said people should ease their concerns about job losses.

- Contrary to what many people believe, artificial intelligence can make things easier, so that we no longer have to bother with tedious and time-consuming tasks. This allows humans to engage in creative pursuits and new ideas, leaving us free to create new kinds of designs. In this view, the impact of artificial intelligence depends on how we use it.

OpenAI CEO Sam Altman responded: “Although we have made great strides in artificial intelligence with this technology, there are still many areas of AI that humans have not yet mastered.

There is a lot of hype surrounding GPT-3. It is interesting, but it has its weaknesses, and sometimes it makes silly mistakes. Artificial intelligence is set to change the world in the future, but GPT-3 is just an early glimpse. There is still a lot to figure out.”

In short, many experts offered interesting examples of GPT-3's natural language abilities. The media and tech communities both congratulated OpenAI on its progress, while warning that this could lead to huge technological upheaval in the future. The CEO of OpenAI, however, agrees with the researchers and critics of this technology. He acknowledges that GPT-3 represents a huge leap forward in artificial intelligence, but that it does not truly understand language and that there are significant problems with using this model in the real world, such as bias and training time.

What are the limitations of GPT-3?

The few-shot learning ability that GPT-3 has demonstrated is a notable advance in artificial intelligence, and it is remarkable that such progress was made simply by enlarging the scale of existing systems.

But the extraordinary results it has shown have also caused a great deal of hype. Here we make some remarks on why this hype should be tempered. In general, the breadth of GPT-3's capabilities, together with claims such as “artificial intelligence may make coders, and even entire industries, obsolete,” has raised concerns that it will jeopardize related jobs. Although GPT-3 represents a significant advance in language models, it lacks real intelligence and cannot fully replace human staff.

After all, GPT-3 is similar to all its predecessors, only more advanced. Although enlarging the training scale has yielded excellent results, GPT-3 has limitations:

- Lack of long-term memory (as GPT-3 works now, it cannot learn from successive interactions the way humans do).

- Input size limit (GPT-3 has a fixed maximum input size of 2,048 tokens, so longer requests cannot be processed).

- It can only work with text (so it cannot work with images, sound, or anything else that humans can easily perceive).

- Lack of reliability (GPT-3 is opaque in some areas, so there is no guarantee that it will not generate incorrect or problematic text in answering some questions).

- Lack of interpretability (when GPT-3 behaves in surprising ways, it may be difficult or even impossible to correct or prevent such behavior).

- Slow inference (current GPT-3 models are expensive and inconvenient to run because of their large scale).
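The input size limit in the list above can be illustrated with a rough pre-check on prompt length. This is only a sketch: the 2,048-token context window is the figure reported in the GPT-3 paper, while the words-to-tokens ratio, the reserved output budget, and the function names are assumptions made here. A real tokenizer would give different counts.

```python
CONTEXT_WINDOW_TOKENS = 2048  # context size reported for GPT-3


def estimate_tokens(text, tokens_per_word=1.3):
    """Crude token estimate. Assumption: about 1.3 tokens per
    whitespace-separated word; this is a heuristic, not a tokenizer."""
    return int(len(text.split()) * tokens_per_word)


def fits_in_context(prompt, reserved_for_output=256):
    """Return True if the estimated prompt size plus a budget reserved
    for the generated answer stays within the context window."""
    return estimate_tokens(prompt) + reserved_for_output <= CONTEXT_WINDOW_TOKENS


short_prompt = "Translate English to French: cheese =>"
long_prompt = "word " * 5000  # far more text than the window can hold

print(fits_in_context(short_prompt))
print(fits_in_context(long_prompt))
```

Because the prompt and the generated answer share the same window, a prompt that nearly fills it leaves little room for output, which is why the sketch reserves part of the budget.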

What is the impact of GPT-3 on future jobs?

While technologies like GPT-3 could change the nature of all jobs in the future, that does not necessarily mean that those jobs will disappear. The adoption of new technologies is usually a long and slow process, and many artificial intelligence technologies will help humans in their jobs rather than replace them. Helping is much more likely than replacing, because artificial intelligence models need to be monitored by humans to catch potential flaws. In web development, for example, someone with technical knowledge and expertise still has to review and correct the code that GPT-3 generates.

Computer vision, which made many leaps before NLP did, raised similar concerns about how artificial intelligence could take over jobs in healthcare. But instead of taking jobs and replacing doctors such as radiologists, artificial intelligence can make their workflow easier. Stanford radiologist Curtis Langlotz says artificial intelligence will not replace radiologists, but radiologists who use artificial intelligence will replace those who do not. The same may be true of GPT-3. In the end, it is just a model, and models are not perfect.

Some believe that GPT-3 is a big step towards artificial general intelligence, that is, intelligence similar to what humans have. While GPT-3 does represent progress, one point is worth making amid the excitement. Emily Bender, a computational linguist at the University of Washington, and Alexander Koller of Saarland University recently proposed the octopus test. In this thought experiment, two people live on separate remote islands and communicate via a cable on the ocean floor.

An octopus taps into the cable and can listen to their conversations. It serves as a stand-in for language models such as GPT-3. If the octopus can successfully impersonate one of the two people, it passes the test. But the two researchers also gave examples of situations in which the octopus would fail, such as conversations about building equipment or self-defense. This is because such models deal only with text and know nothing about the real world, which has a significant impact on language comprehension.

Improving GPT-3 and its successors is like preparing the octopus to do the best it can. As models like GPT-3 become more sophisticated, they will show different strengths and weaknesses. But a model trained on big data still learns only from written text. Real understanding comes from the interaction of language, minds, and the real world, which AIs like GPT-3 cannot experience.