A common warning in Google Search Console (formerly Google Webmaster Tools) is "Googlebot cannot access CSS and JS files", which means that Google cannot load the CSS and JavaScript files of your WordPress site. You may see this error in the Search Console dashboard, or Google may email it to you and ask you to fix it. The email also describes in general terms what you should do, but it is not specific to WordPress, so a beginner who is not familiar with these issues may not know where to start. In this tutorial, I will show how to fix the "Googlebot cannot access CSS and JS files" error in WordPress, so stay with me until the end.

Fix the "Googlebot cannot access CSS and JS files" error in WordPress

Before we fix this error, we need to look at why it occurs and then see why Google needs access to the CSS and JS files in the first place. As the message itself suggests, the error occurs because Googlebot cannot reach these two types of files. The usual cause is a restriction in your site's robots.txt file that blocks the paths where the files are stored.

Let's assume that your robots.txt file restricts access to a folder that contains some CSS and JS files. When Googlebot visits your site, its first step is to read robots.txt to see which directories and files it is allowed to access. If a path is blocked there, Google cannot read the files under it, and the "Googlebot cannot access CSS and JS files" message is emailed to you or displayed in Google Search Console for you to fix.
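The check described above can be approximated locally. The following is a minimal sketch using Python's standard `urllib.robotparser`. The domain `example.com`, the theme name `mytheme`, and the helper `is_allowed` are hypothetical names chosen for illustration, and Python's parser only approximates Google's own matching rules.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt: str, url: str, agent: str = "Googlebot") -> bool:
    """Return True if `agent` may fetch `url` under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


# Hypothetical robots.txt that blocks the themes folder for every crawler.
RESTRICTIVE = """\
User-agent: *
Disallow: /wp-content/themes/
"""

# Hypothetical URLs: a theme stylesheet and an ordinary post.
css_url = "https://example.com/wp-content/themes/mytheme/style.css"
post_url = "https://example.com/hello-world/"

print(is_allowed(RESTRICTIVE, css_url))   # False: the stylesheet is blocked
print(is_allowed(RESTRICTIVE, post_url))  # True: the post itself is crawlable
```

The page is still crawlable, but the stylesheet it depends on is not, which is the situation that triggers the warning.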

Why does Google need access to CSS and JS files?

As you know, Google now gives special weight to site optimization. Sites that are optimized for desktop users or for mobile users are scored for each, and well-optimized sites can be placed higher in search results. For Google, your site must therefore be user-friendly in appearance. For its systems to understand your site just as a normal user does, these files have to load for Googlebot, so Googlebot must be allowed to access them. That is only possible with access to the CSS and JS files, which play the main role in how the site looks to users and search engines.

So far, we have seen that Google can view and examine our site with the styles and appearance of our theme, just as a normal user does. You may now wonder whether Google really sees the site the way a person does and, if so, whether there is a tool that shows how Google sees it.

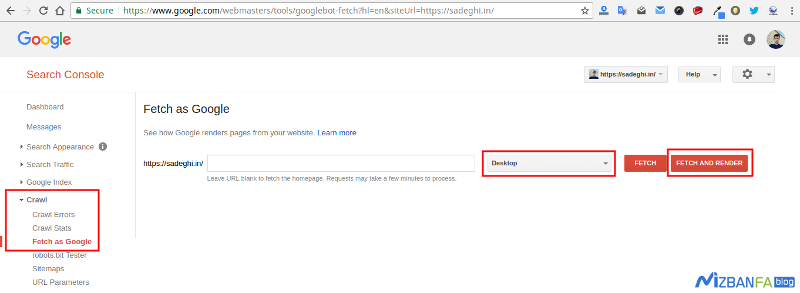

The answer to both questions is yes. To see how Google sees your site, go to Google Search Console at https://www.google.com/webmasters/tools/home, select your site, and go to Crawl > Fetch as Google. Then click the FETCH AND RENDER button shown in the image and wait while Google fetches your site's CSS and JS files, checks them, and produces its view of your site.

If you are interested in a specific page of your site, enter that page's address in the address field next to your domain, then click the FETCH AND RENDER button and wait for the page to be reviewed. When the job finishes successfully, a render is created for you. Wait a little until it appears, similar to the image below.

Now click the finished render to open a page that shows how users and Google each see your site. If the two views match, Google has full access to your WordPress site's JS and CSS files.

If the two views, the visitor's and Googlebot's, do not match, Google could not properly access the style and JavaScript files, and Googlebot does not see the site as users do. In this case, the resources that Google cannot access are listed below the left-hand image. Find out which WordPress directory contains those files, and then lift the restriction on them in the robots.txt file or the .htaccess file.

If the Reason column in this list shows Blocked next to a file, Google cannot access that file, and you must fix the problem. To do so, edit the robots.txt file on your host, in the path where you installed WordPress, and give Googlebot the access it needs to the style and JavaScript files. First, log in to your host and open its File Manager section.

Then go to the path where you installed WordPress and open the robots.txt file for editing. If no file with this name exists on your host, you can create it: make a new file named robots.txt in a text editor such as Notepad and upload it to the public_html path. Alternatively, you can create the file by clicking the Files button in the cPanel file manager and filling in the window that opens, similar to the image below.
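The list of blocked resources can also be roughly reproduced offline. The sketch below, using only Python's standard library, collects the stylesheets and scripts a page references and reports the ones its robots.txt blocks. The class `AssetCollector`, the function `blocked_assets`, the sample HTML, and all URLs are hypothetical and chosen for illustration. This is not how Search Console itself works, only an approximation of the same check.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse
from urllib.robotparser import RobotFileParser


class AssetCollector(HTMLParser):
    """Collects absolute URLs of stylesheets and scripts referenced by a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.assets = []

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        rel = (attr.get("rel") or "").lower()
        if tag == "link" and "stylesheet" in rel and attr.get("href"):
            self.assets.append(urljoin(self.base_url, attr["href"]))
        elif tag == "script" and attr.get("src"):
            self.assets.append(urljoin(self.base_url, attr["src"]))


def blocked_assets(html, robots_txt, base_url, agent="Googlebot"):
    """Return same-site CSS/JS URLs that robots.txt blocks for `agent`."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    collector = AssetCollector(base_url)
    collector.feed(html)
    site_host = urlparse(base_url).netloc
    return [
        url
        for url in collector.assets
        # robots.txt only governs its own host, so third-party assets are skipped
        if urlparse(url).netloc == site_host and not rules.can_fetch(agent, url)
    ]


# Hypothetical page and rules, for illustration only.
SAMPLE_HTML = """
<html><head>
<link rel="stylesheet" href="/wp-content/themes/mytheme/style.css">
<script src="/wp-includes/js/jquery/jquery.js"></script>
<script src="/assets/site.js"></script>
<script src="https://cdn.example.net/lib.js"></script>
</head><body></body></html>
"""

SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /wp-includes/
Disallow: /wp-content/themes/
"""

result = blocked_assets(SAMPLE_HTML, SAMPLE_ROBOTS, "https://example.com/")
for url in result:
    print("Blocked:", url)
```

With the sample data, the theme stylesheet and the script under `/wp-includes/` are reported, while `/assets/site.js` and the third-party script are not.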

This file contains rules similar to the sample below, where:

- A line that starts with the Allow directive grants access to the given folder or file.

- A line that starts with the Disallow directive denies access to the given folder or file.

    User-agent: *
    Disallow: /wp-admin/
    Disallow: /wp-includes/
    Disallow: /wp-content/plugins/
    Disallow: /wp-content/themes/
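To see how the two directives interact, here is a small sketch using Python's standard `urllib.robotparser`. The rules and paths are hypothetical examples, not taken from any real site. Python's parser applies the first matching rule, while Google documents that the most specific rule wins, so the Allow line is listed first here to make both interpretations agree.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: block the admin area but allow one file inside it.
RULES = """\
User-agent: *
Allow: /wp-admin/admin-ajax.php
Disallow: /wp-admin/
"""

parser = RobotFileParser()
parser.parse(RULES.splitlines())

# Hypothetical paths to test against the rules above.
paths = [
    "/wp-admin/admin-ajax.php",
    "/wp-admin/options.php",
    "/wp-content/uploads/logo.png",
]

results = {path: parser.can_fetch("Googlebot", path) for path in paths}
for path, allowed in results.items():
    print(f"{path}: {'allowed' if allowed else 'blocked'}")
```

A path that matches no rule at all, such as the uploads file here, is allowed by default.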

Therefore, if your robots.txt file blocks the /wp-content/plugins/ and /wp-content/themes/ folders of your site with the Disallow directive, you should change it. To do so, just delete the relevant lines. The same applies to /wp-includes/, which also holds scripts and styles. If you want to block a single file inside a directory, write a rule with the exact path to that file rather than hiding the whole directory from the search engine. The most common default robots.txt for WordPress blocks Google's robots only from the wp-admin folder, so just put the following rules in it.

    User-agent: *
    Disallow: /wp-admin/
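As a quick sanity check before uploading, the restrictive file and the minimal file can be compared side by side. This is a sketch using Python's standard `urllib.robotparser`. The helper `audit` and the theme and plugin names in the paths are hypothetical, and the result only approximates how Googlebot evaluates the rules.

```python
from urllib.robotparser import RobotFileParser

# The restrictive sample from this tutorial.
BEFORE = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /wp-includes/
Disallow: /wp-content/plugins/
Disallow: /wp-content/themes/
"""

# The minimal file that blocks only the admin area.
AFTER = """\
User-agent: *
Disallow: /wp-admin/
"""

# Hypothetical asset paths; replace them with files from your own site.
PATHS = [
    "/wp-content/themes/mytheme/style.css",
    "/wp-content/plugins/myplugin/script.js",
    "/wp-includes/js/jquery/jquery.js",
    "/wp-admin/index.php",
]


def audit(robots_txt, paths, agent="Googlebot"):
    """Map each path to True (crawlable) or False (blocked) for `agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {path: parser.can_fetch(agent, path) for path in paths}


before = audit(BEFORE, PATHS)
after = audit(AFTER, PATHS)
for path in PATHS:
    print(f"{path}: before={before[path]} after={after[path]}")
```

With the minimal file, the three asset paths become crawlable and only the admin path stays blocked.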

After you have corrected the access to the various paths in this file, run a new Fetch and Render in Google Search Console and check the result again.