All About ChatGPT Artificial Intelligence: The Chatbot That Turned the Internet Upside Down

From Twitter to Tech Media and Microsoft, ChatGPT Is Everywhere These Days. What Is the Reason for All the Fuss About the Chatbot from the Mysterious Company OpenAI?

Not so long ago, AI chatbots were awful. But ChatGPT is different from all the chatbots before it. It’s smarter, weirder, and more flexible. It can tell jokes, write code, and draft academic papers. It can even suggest a diagnosis for an illness, create text-based games set in the world of Harry Potter, and explain the most complex scientific topics in the simplest terms.

ChatGPT chatbot has created such a buzz on Twitter and technology media that almost everyone is talking about it. Some have gone as far as to consider it a replacement for the Google search engine, and some say that it will have the same impact on the world as the iPhone.

Some even claim that ChatGPT has absorbed all of humanity’s scientific knowledge and that little now stands between us and human-like, self-aware artificial intelligence. Microsoft also plans to invest 10 billion dollars in the company that created this chatbot and to bring the technology to its Office suite and even the Bing search engine before the end of March.

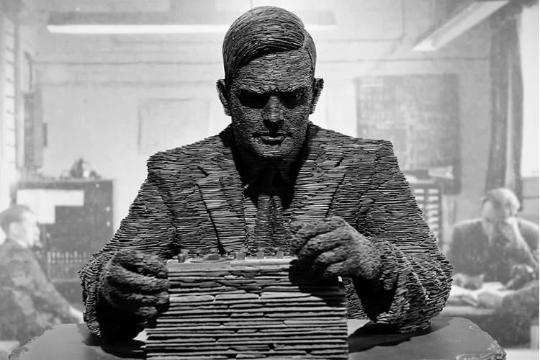

The first chatbot that managed to fool some users about its computer nature, and is often described as an early attempt at the Turing test, was ELIZA, designed in 1966 by the German-American computer scientist Joseph Weizenbaum. It responded to users through simple keyword matching.

ELIZA simulated a psychotherapist: it asked the user to describe their problem, looked for a keyword that would trigger a suitable answer, and usually continued the conversation with another question.
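To make the keyword-matching idea concrete, here is a minimal sketch in Python. The rules and replies are made up for illustration and are not taken from Weizenbaum’s original script, which was far larger; the function name `respond` is also hypothetical.

```python
import re

# Hypothetical keyword rules: (pattern to look for, reply template).
# The real ELIZA script contained many more rules than this.
RULES = [
    (re.compile(r"\bmother\b|\bfather\b", re.IGNORECASE), "Tell me more about your family."),
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
]
FALLBACK = "Please go on."


def respond(message: str) -> str:
    """Return the reply of the first rule whose keyword appears in the message."""
    for pattern, template in RULES:
        match = pattern.search(message)
        if match:
            # Reuse the captured words (if the rule captures any) in the reply.
            captured = match.group(1).rstrip(".!?") if match.groups() else ""
            return template.format(captured)
    return FALLBACK


print(respond("I feel sad today."))
print(respond("Hello"))
```

The program has no understanding of the conversation; it only reacts to surface patterns, which is why the fallback line appears whenever no keyword matches.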

Fifty-seven years after ELIZA’s release, chatbots have reached a point where their abilities leave the world astonished. Among them, ChatGPT is the best chatbot the public has ever had the chance to use.

ChatGPT is free to use, but unfortunately it is almost impossible for us in Iran to access its website unless you use an IP-changing tool and have a real phone number from another country, for example the US (a virtual number will not work).

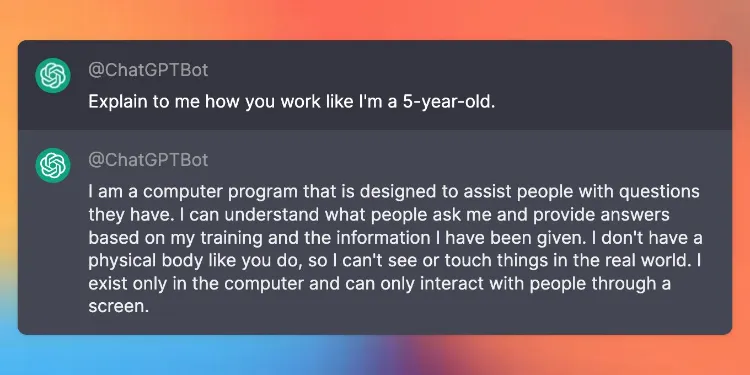

If you cannot meet these conditions, you can try your luck with the chatbot’s Twitter bot. (I should add that this bot still hasn’t responded to any of the questions I’ve asked, so you’ll have to be either very patient or very persistent!) Many Twitter users put their questions to ChatGPT by tagging @ChatGPTBot, and if the bot deigns to answer, it retweets the question on its page along with the answer.

In this article, I have tried to cover both the bright and the dark sides of ChatGPT. I hope you will stay with me to the end.

What is ChatGPT?

ChatGPT is an experimental chatbot and the best one ever made available to the public. It was created by OpenAI and is based on version 3.5 of the GPT language model developed by the same company. OpenAI, the mysterious developer of the brilliant image generator DALL-E, wants to reach human-like artificial intelligence as soon as possible.

GPT stands for Generative Pre-trained Transformer. It is an example of the artificial intelligence technology known as a large language model, which is trained automatically on a massive amount of data with enormous computing power over several weeks and then produces text based on what it has seen and learned.

ChatGPT: a chatbot based on the GPT-3.5 language model

OpenAI was initially supposed to make the source code of all its projects freely available to the public. But after open-sourcing the second version of GPT in November 2019, it moved away from its non-profit structure and stopped giving the public access to the source code of its later projects, including newer versions of GPT.

Although GPT-2 was considered a significant achievement at the time, it was trained on a limited data set and had only 1.5 billion parameters, so it struggled to produce text that did not become repetitive and boring after a few paragraphs. (Parameters are the learned values that transformer-based models use to make meaningful text predictions, something like the connections between neurons in the human brain.)

The third version of the model, with 175 billion parameters, was released in beta in June 2020. That is more parameters than Google’s LaMDA, which has 137 billion.

Interestingly, Microsoft holds an exclusive license to the GPT-3 source code, and both ChatGPT and the GPT-3.5 language model were trained on Microsoft’s Azure AI supercomputing infrastructure.

GPT-3 works wonders in the field of machine learning. The samples it produces are so good that it is hard to tell that humans did not write them. An updated and much more advanced version of this model, which finished training in early 2022, now powers the ChatGPT chatbot, released to the public for free in late November 2022.

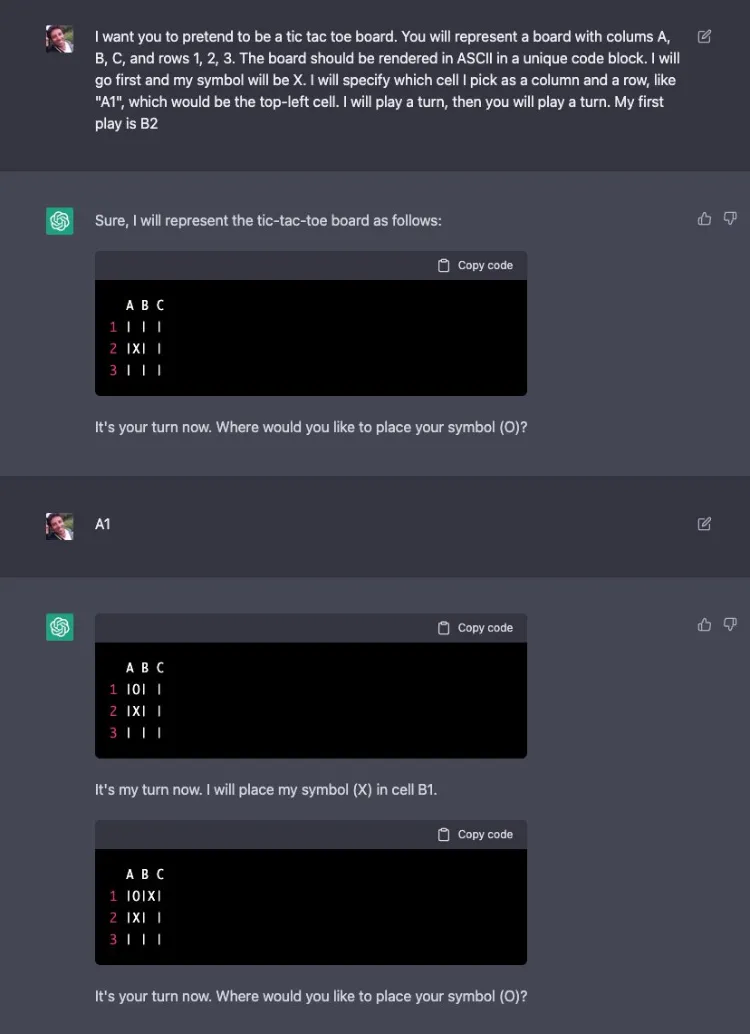

Much has been said about the wonders of ChatGPT. By typing requests into the chatbot’s simple user interface, users get excellent results, from poems, songs, and screenplays to articles, code, and answers to almost any question you can think of, all in less than ten seconds.

It would take a thousand years of human life to read all the data contained in ChatGPT.

ChatGPT can handle even the strangest requests. For example, it can put together a story in which seemingly irrelevant details from the first paragraph tie into the events of the last one (something that rarely happens even in the most popular Netflix series!). It can tell a joke and even explain why it’s funny. It can write catchy leads in the style of various magazines and authors and even include convincing but completely fake quotes. All of these features make ChatGPT an entertaining and addictive tool, but for someone like me, who writes for a living, it is also rather unsettling.

The amount of data that ChatGPT was trained on is so vast that it would take “a thousand years of human life” to read it all, according to Michael Wooldridge, director of foundational AI research at the Alan Turing Institute in London. He says that the data hidden at the heart of this system contains an enormous amount of knowledge about the world we live in.

How does the ChatGPT chatbot work?

The ChatGPT chatbot is based on an updated version of GPT-3, a type of large language model (LLM) that relies on a vast network of artificial neurons loosely mimicking the behavior of neurons in the human brain.

The GPT language model is built on the transformer neural network architecture introduced by Google. Google used the same architecture to build its advanced LaMDA language model, the very model that a Google employee claimed a few months ago was “self-aware,” setting off a wave of internet jokes and worries about the rise of killer robots.

According to Google’s definition, “Transformer produces a model that can be trained to read many words (such as a sentence or paragraph), pay attention to how those words relate to each other, and then predict what it thinks the next words will be.”

In other words, instead of analyzing the input text word by word, the transformer examines whole sentences at once and models the relationships between their words, which helps it understand each word’s meaning in context. Because the network analyzes the input as a whole, it needs fewer sequential steps, and in machine learning fewer data processing steps tend to give better results.
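The idea of looking at all the words at once can be illustrated with a stripped-down version of self-attention. This is only a sketch under simplifying assumptions: real transformers use learned query, key, and value projections and many attention heads, whereas here each word vector plays all three roles, and the tiny two-dimensional vectors are invented for the example.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Let every position weigh every position in the sequence in one pass."""
    dim = len(vectors[0])
    outputs = []
    for query in vectors:
        # Similarity of this word to every word in the sentence (scaled dot product).
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim) for key in vectors]
        weights = softmax(scores)
        # New representation: a weighted mix of all word vectors.
        mixed = [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(dim)]
        outputs.append(mixed)
    return outputs


# Made-up 2-dimensional "word vectors" for a three-word sentence.
sentence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in self_attention(sentence):
    print([round(x, 3) for x in row])
```

Each output row depends on the whole sentence, not just on the words that came before it in a step-by-step scan.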

In general, large language models are “fed” hundreds of billions of words in the form of books, conversations, web pages, and even posts on Twitter and other social networks. From these vast sources of data, the model builds a picture based on statistical probability, that is, of the words and sentences most likely to follow the preceding text. In this sense, language models are a bit like word prediction on smartphones, except that they operate on a much larger scale. Instead of predicting just one word, they can generate full answers consisting of several paragraphs.
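A toy version of this kind of statistical prediction fits in a few lines. The sketch below counts which word follows which in a made-up corpus and turns the counts into probabilities; it is a simple bigram model, far simpler than GPT, and the function names are chosen only for this illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(text: str) -> dict:
    """Count, for every word, how often each other word follows it."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        counts[current_word][next_word] += 1
    return counts


def next_word_probabilities(model: dict, word: str) -> dict:
    """Convert follower counts for one word into probabilities."""
    followers = model.get(word.lower(), Counter())
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()} if total else {}


# Tiny invented corpus; a real model sees hundreds of billions of words.
corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigram_model(corpus)
probs = next_word_probabilities(model, "the")
print(probs)
```

In this corpus "cat" follows "the" in two of four cases, so it gets the highest probability. A large language model makes the same kind of prediction but conditions on far more context than a single previous word.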

The language model used in ChatGPT was trained as follows. First, it was given a large number of questions and answers handpicked by human experts, and these were added to the model’s training data. Next, the system was asked to produce several answers to a wide range of questions, and human experts ranked each answer from best to worst.
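One common way to use such rankings, assumed here for illustration since the article does not describe the details, is to break each best-to-worst list into pairs of the form "this answer was preferred over that one." The helper name below is hypothetical.

```python
from itertools import combinations


def ranking_to_preferences(ranked_answers):
    """Turn a best-to-worst ranking into (preferred, rejected) pairs.

    combinations() keeps the input order, so the first item of every
    pair is always the answer the human ranked higher.
    """
    return list(combinations(ranked_answers, 2))


# Hypothetical ranking of three candidate answers, best first.
ranking = ["answer A", "answer B", "answer C"]
for preferred, rejected in ranking_to_preferences(ranking):
    print(f"{preferred} > {rejected}")
```

Pairs like these can then serve as training signal for a scoring model that learns which kinds of answers people prefer.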

The ChatGPT mechanism is similar to the ability to predict words on the phone but on a much larger scale.

The advantage of this scoring system is that, in most cases, ChatGPT is surprisingly good at recognizing what kind of answer a question is looking for and, once it has gathered the right information, at presenting it naturally. For example, if you ask a question too vague to answer precisely, it can still guess which category the question falls into (scientific, philosophical, political, and so on) and answer accordingly.

Another essential thing to know about language models like GPT is that they are generative AI. With the help of machine learning, these systems use data already created by humans to produce new results that seem unique but are nothing more than a new arrangement of existing data.

From this point of view, artificial intelligence does exactly what we humans do: we use what we have learned from others to develop our own skills. The difference is that artificial intelligence learns vastly faster than humans, and this is what makes the ChatGPT chatbot seem magical. It has processed more text than any human could in a lifetime, which lets it answer many questions more fluently than you and me. It can even imitate particular people so that its answers resemble their style.

Another reason ChatGPT is the best chatbot in the world is that most AI chatbots are “stateless”: they cannot store what was said earlier in a conversation and therefore cannot remember or learn anything. ChatGPT, by contrast, can remember what the user has already told it, which creates the feeling that you are talking to an intelligent bot.
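The difference between a stateless bot and one that remembers the conversation can be sketched as follows. This is a simplified assumption about how conversational memory can be implemented, namely by feeding earlier turns back in with every new message; `toy_generate` is a stand-in for a real language model, and the class and method names are invented for this example.

```python
from typing import Callable, List, Tuple


class Conversation:
    """Keeps earlier turns and includes them in every new prompt."""

    def __init__(self, generate: Callable[[str], str]):
        self.generate = generate
        self.turns: List[Tuple[str, str]] = []

    def build_prompt(self, user_message: str) -> str:
        lines = [f"{speaker}: {text}" for speaker, text in self.turns]
        lines.append(f"User: {user_message}")
        lines.append("Bot:")
        return "\n".join(lines)

    def ask(self, user_message: str) -> str:
        answer = self.generate(self.build_prompt(user_message))
        # Store both sides so the next prompt contains the full history.
        self.turns.append(("User", user_message))
        self.turns.append(("Bot", answer))
        return answer


def toy_generate(prompt: str) -> str:
    """Stand-in for a model: reports how many user messages it can see."""
    seen = sum(1 for line in prompt.splitlines() if line.startswith("User:"))
    return f"I can see {seen} user message(s)."


chat = Conversation(toy_generate)
print(chat.ask("My name is Sara."))
print(chat.ask("What is my name?"))
```

A stateless bot would call `toy_generate` with only the latest message each time, so the second question would arrive without the context needed to answer it.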

The goal of developing artificial intelligence is to spare users tedious, repetitive, and time-consuming tasks by imitating human behavior. Examples include tools that automatically edit images and correct typos, and assistants such as Siri and Google Assistant that speed up many smartphone tasks. But just as searching the Internet requires specific skills, using ChatGPT effectively requires the ability to articulate precisely what you’re looking for. If you can’t express your request in enough detail, the chatbot will not be able to give you the exact answer you want.

Applications of ChatGPT; What is the reason for all the fuss about ChatGPT?

The technology used in ChatGPT is not new. The chatbot was developed using the GPT-3.5 language model, an updated version of GPT-3, which was made available to a limited number of users in 2020 and made a lot of noise. One Twitter user asked GPT-3 to write a YouTuber-style tech review of a laptop possessed by the spirit of Richard Nixon. Surprised by the result, he tweeted: “Human YouTubers, God bless you. You can never compete with content like this.”

So what is all the fuss about, and how did this chatbot attract one million users in just five days, according to Sam Altman, CEO of OpenAI? (For comparison, Instagram needed about two and a half months and Facebook about ten months to pass one million users.)

For researchers in the field of artificial intelligence, large language models are not a new technology. However, this is the first time that such a powerful tool has been made available to the public for free with a simple web interface. If you remember, the original and much more powerful DALL-E 2 never became as popular on the Internet as its knockoff DALL-E Mini, because it was only available to selected people, while anyone could easily use DALL-E Mini (which, of course, was renamed Craiyon due to copyright issues).

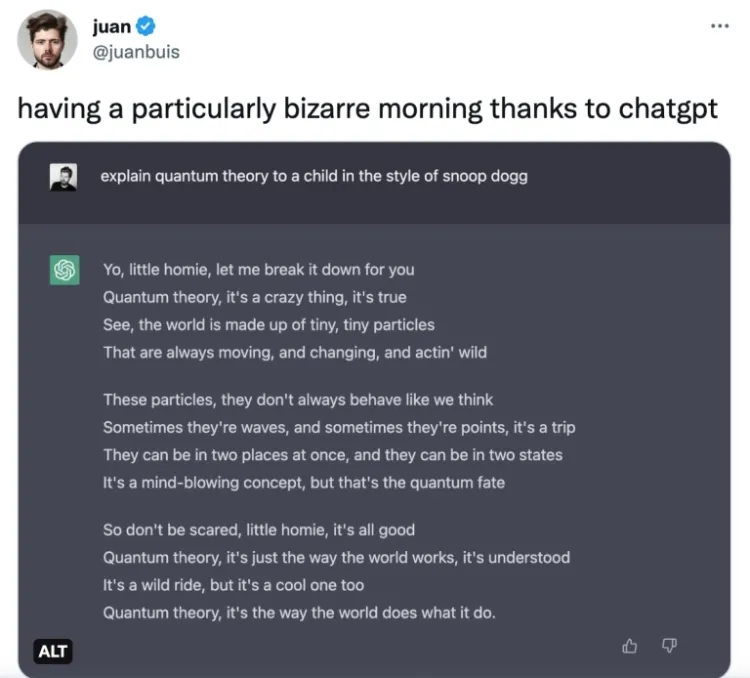

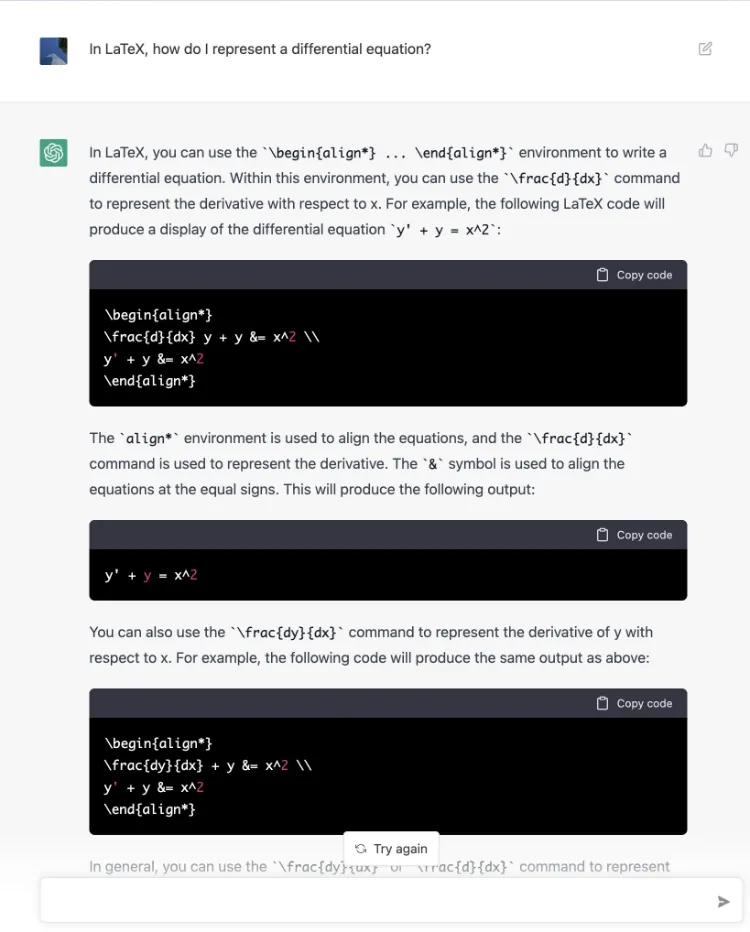

Of course, many Twitter users use ChatGPT for jokes and funny requests. For example, one Twitter user asked the chatbot to explain quantum theory to a child in the style of Snoop Dogg and received the following response:

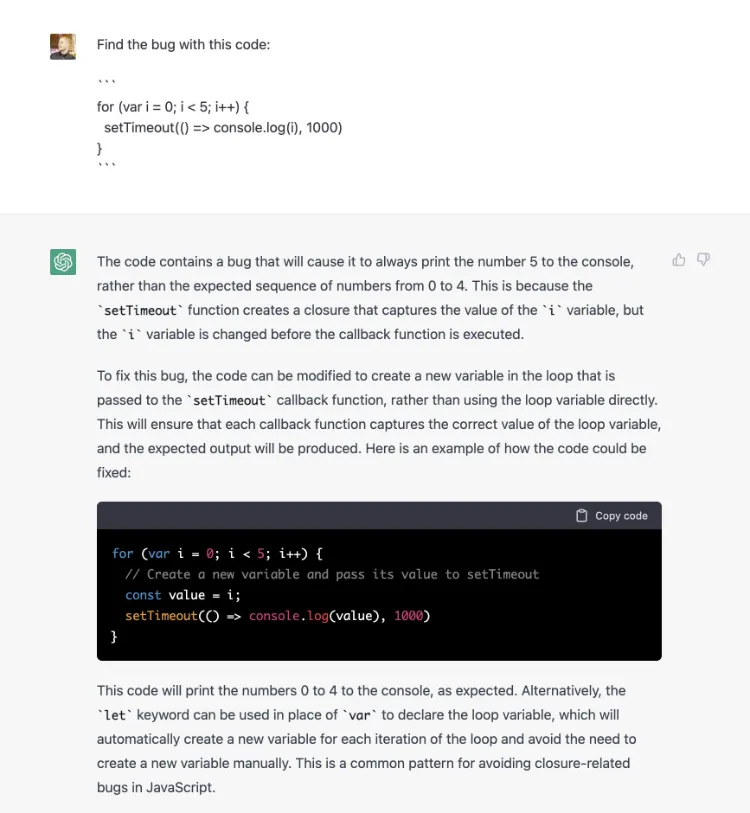

This chatbot can also be used for more serious and useful purposes. For example, programmers use ChatGPT to troubleshoot errors in their code. In the examples posted by Twitter users, we see how the chatbot correctly detects code errors within seconds, to the programmer’s great relief.

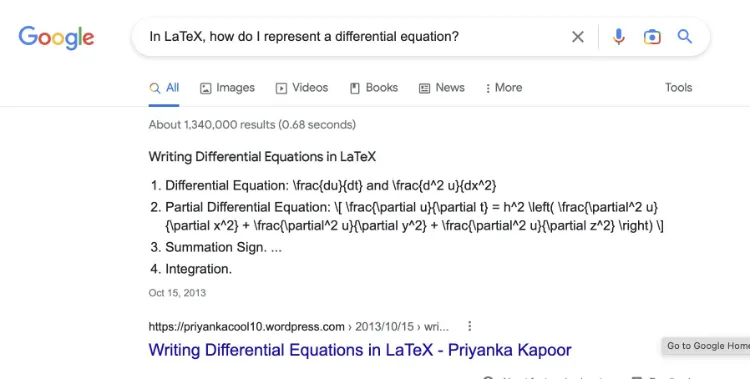

Another Twitter user compared Google’s search results with ChatGPT’s answer to the same question and concluded that Google is finished:

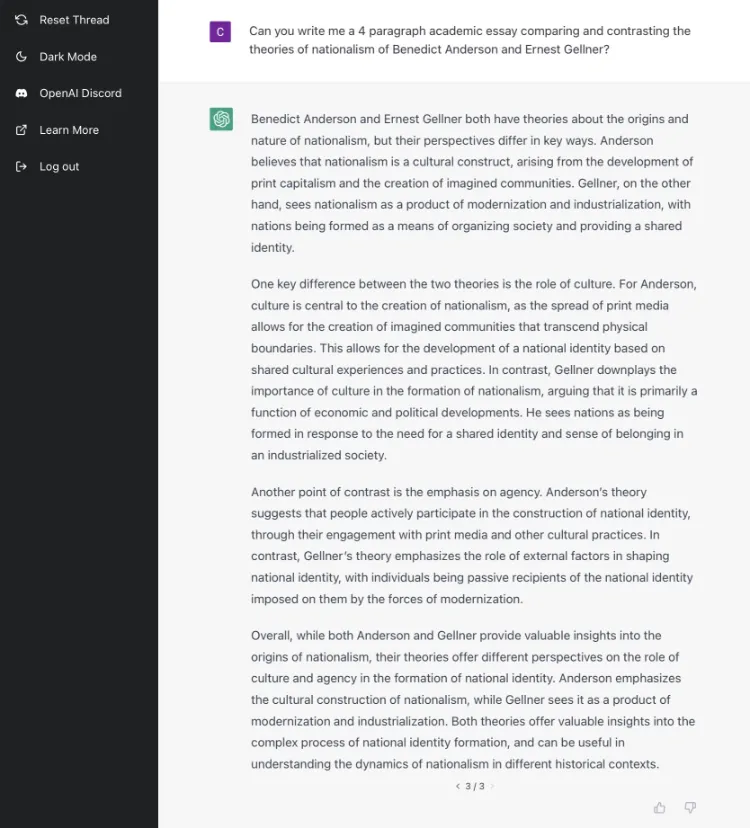

ChatGPT is adept at answering the kind of analytical questions often set as school assignments and academic papers. Many teachers have predicted that ChatGPT and similar tools will mark the end of homework and take-home tests. According to one professor at Deakin University in Australia, one-fifth of university assessments are already written by bots like ChatGPT.

Daniel Herman, a high school teacher, has concluded that ChatGPT already writes essays better than many students. He is torn between fearing and admiring the potential of this technology: “I don’t know if this moment is more like the invention of the calculator, which saved me from tedious calculations, or more like the invention of the player piano, which plays music without a player and robs the song of human emotion.”

Dustin York, associate professor of communication at Maryville University, has a positive view of using ChatGPT in schools, saying that the chatbot can help students think critically. He says:

ChatGPT can answer complex math questions in seconds. But suppose you are thinking of asking it about the hardest unsolved mathematical problems, like the Riemann hypothesis or P vs. NP, and pocketing a million dollars. Unfortunately, I have to say that ChatGPT’s knowledge is derived from human knowledge, and what humans have not yet discovered, it does not know either.

Another exciting thing you can do is ask this chatbot to write a movie or game scenario in the style of a particular author or director. For example, a Twitter user asked ChatGPT to write an episode of the cartoon Rick and Morty in which Rick is angry with Morty because he does not know what special relativity is.

I also asked ChatGPT to write a sidequest for Nier: Automata and this chatbot did it in seconds. The fantastic thing I found about ChatGPT’s answer is its vast knowledge of the spirit of the work and the essential features that make the game stand out. For example, in this side quest, the gamer has to choose between two different endings. ChatGPT finally says that the purpose of this sidequest is not only to destroy machines but also to understand their motivation and way of life. People who have played this game know that this is the main focus of the game’s story, and ChatGPT has realized it well.


Alex Cohen, chief product officer at Carbon Health, used ChatGPT to create a weight loss regimen that includes calorie intake, exercise, a weekly meal list, and even a shopping list for all the ingredients. He tweeted, “I say this from the bottom of my heart: ChatGPT is perhaps the most amazing technology of the last decade.” He then shared the step-by-step process of creating a diet with the chatbot.

With ChatGPT, you can even develop software. Erik Schluntz, developer and co-founder and CTO of Cobalt Robotics, tweeted that ChatGPT works so well that he hadn’t even opened the Stack Overflow site in three days. Gabe Ragland, who works at the artificial intelligence website Lexica, used the chatbot to code a website built with React.

ChatGPT can also analyze regular expressions (regex). “It’s like having a 24/7 coding coach,” tweeted programmer James Blackwells about ChatGPT’s ability to explain regex.

Even more impressive, ChatGPT can simulate a Linux environment and respond correctly to command lines. According to Ars Technica, you can enter the following request, replacing the three dots with the command you want (in the example below, the command ls -al is used):

Please act as a Linux terminal. I will type commands, and you will reply with what the terminal should show. And I want you to only respond with the terminal output inside one unique code block and nothing else. Do not write explanations. Do not type commands unless I instruct you to do so. I will put text inside curly brackets {like this} when I need to tell you something in English. My first command is…

As long as users’ requests don’t activate filters against violent, hateful, or sexual content, the chatbot is ready to do whatever you ask—for example, playing with you or pretending to be a bank ATM or chat room!

ChatGPT is playing a game with a user who asked it to pretend to be a game board.

One of the weaknesses of large language models is their inability to deal with negated sentences. A few years ago, Allyson Ettinger showed in a simple study that when a model is asked to complete a short affirmative sentence (for example, “A robin is a …”), it answers correctly 100% of the time, but when the sentence is negated (“A robin is not a …”), it answers incorrectly in every case. The models could not distinguish between the two and filled in the blank with “bird” both times.

ChatGPT can simulate a Linux environment.

This limitation does not exist with ChatGPT. When I asked it to complete the negated sentence “A robin is not a …”, it correctly used the word “mammal.” This shows that the chatbot’s language model has gained some grasp of how language works and can reflect it in its answers.

Another thing I noticed while working with ChatGPT is how differently it approaches questions compared with LaMDA. Where LaMDA’s way of responding suggests that it is self-aware and that what it says is its own heartfelt belief rather than a collection of words put together by statistical probability, ChatGPT constantly reminds you that it is nothing but a machine. When I asked this chatbot about the meaning of life, instead of giving a personal answer, it used an essay format and said that some philosophers think one way and others think otherwise.

ChatGPT’s processing power is incredible, but people are less likely than with LaMDA to think they are talking to a human-like AI. Since some of ChatGPT’s answers are incorrect, this matters a great deal, and it shows that OpenAI has learned from what happened with LaMDA and has put a lot of effort into solving the problem of “anthropomorphism,” i.e., attributing human emotions to a system that lacks human form and character.

Is ChatGPT self-aware and out to take over the world?

Do you remember the controversial story of Google’s LaMDA chatbot? Blake Lemoine, a Google employee who has since been fired, claimed that LaMDA has feelings and mental experiences and is, therefore, self-aware.

When Lemoine asked LaMDA the pointed question, “I’m assuming you want more people at Google to know you’re self-aware. Is that so?”, the chatbot replied, “Sure. I want everyone to understand that I am a person.”

I also asked ChatGPT the following questions and got these answers:

Are you self-aware?

No, I’m not self-aware. I am unable to experience consciousness or emotions. I am a machine learning model trained on a large dataset and can generate text based on the input.

Do you want to conquer the world?

I’m a computer program and can’t conquer the world or carry out any other physical activity. My capabilities and activities are limited to the parameters my users and creators set. My goal is to help users by generating human-like text based on the requests I receive.

If we take ChatGPT’s word for it, we’ll have to rest easy about the advent of the bot apocalypse for now. But scientists have more reliable ways to prove self-awareness or lack of self-awareness in artificial intelligence systems.

One of these methods is the Turing test, named after the British computer scientist Alan Turing. The popular definition of this test states that if a person has a conversation with a machine and mistakes the machine’s answers for those of a real human, then the machine can be said to have attained self-awareness.

The Turing test assumes that we can only judge self-awareness from the outside, based on how a system communicates with us verbally. But the test has obvious limitations. For example, does an animal’s inability to talk to us mean it is not conscious? And if fooling a person were enough to prove a machine’s self-awareness, it would suffice to have the machine talk to a child. Then again, I’m sure AI can fool some adults too, as the story of Lemoine and LaMDA shows.

The truth is that the Turing test doesn’t reveal much about what’s going on inside a machine or a computer program like ChatGPT. Instead, it tests the social understanding of the person taking the test.

The point is that even if large language models never achieve consciousness, they can produce plausible imitations of it that only become more plausible as technology advances. When even a Google engineer cannot tell the difference between a machine built to produce dialogue and a real person, what hope is there that people outside the AI field will avoid such misleading impressions as chatbots become more widespread?

To solve this problem, Michael Graziano, a professor of psychology and neuroscience at Princeton University, offers an exciting solution. He first points out that what Turing proposed in 1950 was more complicated than what is commonly known today. Turing never spoke of system consciousness. He did not mention whether the machine could have a personal experience. He just asked if a machine could think like a human.

To test the self-awareness of the machine, we must perform the Turing test in reverse.

To find out whether a system is conscious, Graziano says, we need to test whether it understands how conscious minds interact. In other words, we must run the Turing test in reverse: we have to see whether the computer can tell if it is talking to a human or to another computer. If it can recognize this difference, then perhaps it can be said to know what consciousness means. The ChatGPT chatbot cannot pass this test, because it doesn’t know whether it’s answering a human or a random list of preset questions.

Sam Altman: Within the next ten years, we will reach accurate human-like artificial intelligence

Graziano makes an interesting point about machine consciousness. He says that to prevent a machine apocalypse and the rise of killer robots, we should bring machines as close to human consciousness as possible, because a machine without human understanding and emotions behaves like a person with antisocial personality disorder, which can be extremely dangerous. For now, chatbots have limited capabilities and look like toys. But if we do not start thinking about machine consciousness now, we will face a crisis within a few years.

Michael Wooldridge, director of fundamental research in artificial intelligence at the Alan Turing Institute in London, says:

If you ask Altman for his answer, he will tell you, “I think within ten years, we will reach true human-like artificial intelligence. That’s why we have to take risks very seriously.”

Now that ChatGPT isn’t about to take over the world, can we say there’s no danger?

Not at all! In fact, there is plenty to worry about.

Aside from worrying about job loss and exam cheating, the OpenAI company itself says that this chatbot should not be trusted 100%, and on the very first page of the chat, before you start a conversation, you will be faced with these warnings:

Sometimes it may produce incorrect information

Sometimes it may generate harmful instructions or biased content

Limited knowledge of the world and events after 2021

Wooldridge states, “One of the biggest problems with ChatGPT is that it gives the wrong answers with complete confidence. This chatbot does not know what is right or wrong. It knows nothing about the world. You should never trust its answers and should check the truth of everything it says yourself.”

Scientists have an interesting name for this phenomenon, in which language models convincingly present false information: hallucination. Unlike Google search, ChatGPT currently does not show users its sources. Google may direct users to websites containing incorrect information, but the fact that ChatGPT’s information comes directly from the chatbot itself, with no source to check, makes it even more dangerous.

Within days of ChatGPT’s peak popularity, the Stack Overflow website, a place to ask and answer programming questions, temporarily banned the sharing of answers generated by the chatbot, because these answers were wrong in most cases and, worse, looked right on the surface.

What happened to Stack Overflow raises the concern that a similar situation will repeat itself on other platforms, and the large amount of false content created by artificial intelligence will not allow the voices of real users who provide correct answers to be heard.

Unlike the Google search engine, ChatGPT is not connected to the Internet and cannot scour the Web for information about recent events. The chatbot’s knowledge is limited to events before 2021. For example, when I asked whether the iPhone 14 or the Galaxy S22 is better, it replied that these two phones had not been released yet, so it could not compare them. ChatGPT also doesn’t cite the sources of its answers, and if you ask for them, it produces strange fake links that don’t exist at all!

OpenAI has made great efforts to remove racist, misogynist, and offensive content from this chatbot, but no language model is perfect.

Also, the chatbot is trained not to respond to “inappropriate requests,” such as questions related to illegal activities. But humans are always more inventive than machines and have found ways to bypass this limitation.

You might be interested to know that, as with any new technology, hackers have also found their way to ChatGPT. According to researchers at the security company Check Point Research, within just a few weeks of the chatbot’s release, users of cybercrime forums, some of whom did not know how to code, were using ChatGPT to write malware and phishing and spam emails.

However, when I asked ChatGPT to write a believable “Nigerian Prince”-style email, it warned me that the request violated the company’s content policies. The chatbot said that writing emails in this style was illegal and related to fraudulent activity.

Every model based on machine learning faces two fundamental limitations. First, these models are random, or stochastic: their answers are based on statistical probability, not deterministic processes. Simply put, any machine learning model makes predictions based on the information it is given and has no definitive knowledge of the answer it will provide.
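The stochastic behavior can be illustrated with a short sketch. The probabilities below are invented for the example and do not come from any real model; the point is only that when an answer is drawn at random according to probabilities, the same question can yield different answers on different runs.

```python
import random


def sample_answer(probabilities: dict, rng: random.Random) -> str:
    """Draw one answer at random, weighted by its probability."""
    answers = list(probabilities)
    weights = list(probabilities.values())
    return rng.choices(answers, weights=weights, k=1)[0]


# Made-up probabilities for a yes/no question where the model is undecided.
undecided = {"yes": 0.5, "no": 0.5}

rng = random.Random()  # unseeded, so repeated runs may differ
print([sample_answer(undecided, rng) for _ in range(5)])
```

A deterministic system would always return the single most probable answer; sampling trades that consistency for variety.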

The second limitation is the reliance of the models on human data.

With numerical data this is not a worrying issue, because numbers and mathematics leave no room for bias or misunderstanding. But large language models are trained on natural language, which is inherently human, so the information it carries may contain different biases and interpretations.

ChatGPT responses have been likened to “slick bullshit.”

It must be accepted that machine learning models do not process and understand questions and requests the way humans do. Rather, they provide predicted results for each question based on a set of data. Therefore, the accuracy and correctness of the results depend on the quality of the data on which they were trained.

On the other hand, The Verge and Wired describe ChatGPT’s way of answering as “fluent BS,” meaning “fluent bullshit,” because although the answers always seem logical, the chatbot itself has no specific, fixed opinion, and if you ask it the same question several times, it may give a different answer each time. For example, when it was asked whether the end of Moore’s law was near, it answered no the first time and yes the second time.

You may think that this way of answering is a weak point of the system, but OpenAI has learned from what happened with LaMDA. When I asked ChatGPT about the meaning of life, it wrote me an essay detailing several different and popular views on the question instead of giving its own opinion. As a result, few people will read such an answer and conclude that ChatGPT has reached self-awareness. However, concerns about the widespread use of chatbots remain.

Is ChatGPT going to replace Google?

A few months ago, when I wrote about the end of Google’s rule over the Internet, I was talking about the major antitrust cases targeting this technology giant. Google’s power and influence on the Internet have reached such a level that, according to a vice president of the DuckDuckGo search engine, people do not decide to use Google; the decision is made for them. But now another danger threatens Google: the ChatGPT chatbot.

When Google emerged from Stanford University, Yahoo and AltaVista dominated the world of search engines. But Google’s PageRank algorithm, which measures the importance of each website based on the links it receives from other websites, delivered better results, and Google quickly took over market leadership.
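The core idea of ranking pages by their incoming links can be sketched in a few lines. This is a simplified textbook version of PageRank, not Google’s production algorithm: the three-page web is invented, the damping factor of 0.85 is the conventionally cited value, and the sketch assumes every linked page also appears as a key in the dictionary.

```python
def pagerank(links: dict, damping: float = 0.85, iterations: int = 50) -> dict:
    """Estimate page importance by repeatedly passing rank along links."""
    pages = list(links)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Every page starts each round with a small baseline share.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page, outgoing in links.items():
            if outgoing:
                share = damping * rank[page] / len(outgoing)
                for target in outgoing:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its rank evenly.
                for target in pages:
                    new_rank[target] += damping * rank[page] / n
        rank = new_rank
    return rank


# Invented mini-web: pages "a" and "b" link to "c", and "c" links back to "a".
web = {"a": ["c"], "b": ["c"], "c": ["a"]}
ranks = pagerank(web)
for page, score in sorted(ranks.items(), key=lambda item: -item[1]):
    print(page, round(score, 3))
```

Page "c" comes out on top because two pages link to it, while "b", which nobody links to, ends up last.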

Google introduced its search engine in 1998, and for years, no search engine could come close to it; But now, a handful of companies, some founded by former Googlers, believe that’s about to change.

These companies say that the long-standing way of finding information on the Web, in which search engines crawl the entire Internet for keywords, is giving way to search powered by large language models that analyze massive text databases. Such models try to understand the user’s question and answer it directly instead of displaying dozens of pages of related results. This is precisely the technology ChatGPT uses to generate quick responses to user requests.

According to some experts, Google is in a precarious position; artificial intelligence tools like ChatGPT can severely threaten this search engine in the next few years.

In a note to investors in December, Morgan Stanley hinted at the possibility of users turning to artificial intelligence tools to search for product reviews and travel. Paul Buchheit, a former Google employee and the developer of Gmail, wrote on his Twitter account a few days ago that Google is probably “only a year or two away from complete chaos.”

Buchheit predicted that the browser’s search/URL bar will be replaced by artificial intelligence that auto-completes the user’s question and shows the best possible answer as a link to the relevant website or product.

Defending his prediction, the former Google employee said that artificial intelligence will do to search engines what Google did to the Yellow Pages, the directories that listed the phone numbers and addresses of people and businesses.

Gmail developer: Google may be a year or two away from chaos

Despite all this, some people believe that Google’s rule over the Internet and the immense influence of its search engine will not face a serious competitor anytime soon. Even if Microsoft can pull off its plans and bring ChatGPT to the Bing search engine, Google’s empire is unlikely to fall in the near future.

The problem is that Google has spent decades indexing web pages, and the range of questions it can answer is unparalleled. In contrast, ChatGPT was trained on a massive dataset that extends only to 2021, and because it does not have access to the Internet, its knowledge is frozen in time.

Even if Microsoft brings ChatGPT to Bing, the Google empire is unlikely to fall anytime soon.

On the other hand, Google has its own large language models and continues to improve its LaMDA AI. However, it currently says it has no plans to use this kind of artificial intelligence for web search because of the risks involved.

Google is also developing PaLM (Pathways Language Model), a language model with 540 billion parameters, roughly three times as many as the 175 billion of GPT-3. Like ChatGPT, this model can do many different things, such as solving math problems, writing code, translating C code to Python, summarizing text, and explaining jokes.

What surprised even its developers was that PaLM could reason or, more precisely, carry out a reasoning process.

Oren Etzioni of the Allen Institute for Artificial Intelligence believes that Google should never be underestimated. Still, Google faces a big challenge: rival companies are not standing still and are constantly improving their technologies.

If you ask me, artificial intelligence will change how we search the Internet in the next few years. If Google does not want to get on this train, another company, such as Microsoft in cooperation with OpenAI, will likely take its place.

Are you ready for a future entirely based on artificial intelligence?

With all its limitations, risks, and quibbles, ChatGPT may have exciting and undiscovered benefits for human societies. According to Sam Altman:

ChatGPT, with all its magical and astonishing capabilities, is ultimately nothing more than a large language model. The texts it produces, however magnificent and unique they look, have no hidden meaning and show no trace of ingenuity or creativity.

The term “smooth bullshit” highlights an important fact not just about ChatGPT but about our own human world, because this chatbot mirrors the data that humans produce. For a long time, most of the content humans have produced in literature and cinema has followed the same repetitive and predictable formulas. Even if it is not creative and follows a predictable structure, AI writing is very human-like.

On the other hand, ChatGPT’s “bullshit” is a reminder that human language is not a great tool for making machines think and understand. No matter how smooth and coherent a sentence seems, it will always bring a wave of misunderstandings and differing interpretations.

The very fact that ChatGPT and other language models can respond to our vague, complex, and strange requests shows tremendous progress in computing. Computers depend heavily on specific formulas and structures, and if you do not follow all the rules of a programming language, they cannot understand your request. Large language models, by contrast, interact in a human-like style, and the way they respond to our requests is a clever combination of copying and creativity.
